Like many technologically oriented people, I am a big fan of high resolution screens. Instinctively I feel that the more pixels there are on the screen, the better (who feels the same way?). And that's definitely a big reason why I've been happy when using the MacBook with the 2880×1800 pixel 15″ screen. While I would have a number of things to say about the overall software experience (some good things, others .. not so good), in this writeup I will focus on screen resolution matters. Because that one alone has been a fun and educational experience ..

I had learned well in advance how this particular laptop works: while the screen resolution is as high as it is, the operating system scales the user interface by a factor of 2.0, so that in terms of physical size, the user experience and all user interface controls are exactly the same as on a "normal" 15″ screen with a 1440×900 resolution. The only difference is that it is difficult to distinguish individual pixels as they are so small, and therefore everything looks more crisp and sharp. But no extra "desktop space" will come: By and large, the user interface will look exactly the same, only smoother. That's the way the Apple engineers designed it.

Those of you who have worked on developing software for Apple devices (whether iOS or OS X) may be aware of how this thing works from an application's perspective. Effectively the software framework of these operating systems, in all simplicity, performs a ×2 computation for all pixel drawing operations. You tell the OS to draw a rectangle of 10×10 pixels .. And it will draw a rectangle of 20×20 pixels. And so forth. And yes, those who have read the Apple documentation will correctly point out that they are not called pixels but points. So to be accurate about the previous sentence: You tell the OS to draw a rectangle of 10×10 points .. And it will draw a rectangle of 20×20 pixels. On a normal (non-"retina") screen, it would consider 1 point to be 1 pixel, and a 10×10 point rectangle would be drawn as 10×10 pixels. Simple enough, right?
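The point-to-pixel conversion described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not Apple's implementation; the `Rect` type and the `points_to_pixels` function are hypothetical names made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """A rectangle; the unit (points or pixels) depends on context."""
    x: float
    y: float
    width: float
    height: float


def points_to_pixels(rect: Rect, scale: float) -> Rect:
    """Convert a rectangle given in points to device pixels.

    `scale` is the points-to-pixels ratio: 1.0 on a normal screen,
    2.0 on a "retina" screen (hypothetical helper, for illustration only).
    """
    return Rect(rect.x * scale, rect.y * scale,
                rect.width * scale, rect.height * scale)


square = Rect(0, 0, 10, 10)             # a 10x10 point rectangle
print(points_to_pixels(square, 1.0))    # normal screen: stays 10x10
print(points_to_pixels(square, 2.0))    # retina screen: becomes 20x20
```

With a factor of 2.0, every point covers a 2×2 block of pixels, which is where the sharper rendering at the same physical size comes from.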

Then again, when we look at it, the way this system has been hardwired into the code of the respective platforms (iOS and OS X) has the signs of programmer panic all over it (which, in my observation, would apply to many other parts of these platforms as well, but that's another story ..). The design of the point/pixel scaling solution looks to me like the kind of solution a programmer is forced to make after happily hacking away on a program and taking their time, when the deadline is approaching and nothing works yet .. So at some point, when the sleepless nights get the better of you and you just cannot stay awake but you know the product demo is in the morning, you pound some kind of a hacky piece of code in there, just something, anything, "if only I could get this damn thing to display some sharp looking pixels so that the demo will go through" (and of course once the demo does go through, the marketing folks are pleased and happy that the product works as intended, and you as the programmer then move on to other tasks, potentially leaving your hacky kludge as the final solution for generations to come) .. And for me the design of Apple's pixel doubling solution really looks like that kind of a solution (those of you who know better, and/or who may have been there, are certainly in a better position to enlighten us on whether it was really like that or some other way .. It's just that it really looks that way). It is the "I don't have the time to design a good framework for this, but I do need to deliver this now" kind of look. All experienced programmers will surely know exactly what I'm talking about.

So if the 2.0 multiplication factor gives 2 times the pixels (in both directions, horizontally and vertically, so that 1 pixel becomes 2 × 2 = 4), then surely a 1.5 factor would give 1.5 times the pixels, right? Or a factor of 3.0 would scale 1 pixel to 9? Hm. Unfortunately it's not quite like that. The information available on the subject does make it clear that it is not just static doubling; OS X has a built-in "resolution independent" scaling feature, you just need to enable it from the system preferences. Yes. Right. Sort of. This one actually took me a little while to find. Because for me, I would like to have the extra space on the desktop. I wouldn't want just double the pixels for smoother rendering (although that's nice also), but I would like to also have more space. I tend to stuff a lot of things on my screen. But for some time, I conceded that it really can't be done. Until I found that in the system preferences app, under displays, one can move the radio button to another setting and the UI will magically transform to show more options (and I tend to advise my students not to design user interfaces that radically change on the fly, as that makes the UI unpredictable and uncomfortable to the user … This would be one example of such). But anyway, from here one can now choose from five presets for the user interface scaling. Two of the options allow for getting more desktop space.

Yehey? Sure. But then I wanted to check if Eqela apps still work well when changing the scaling settings. And they do. But I found something else that was interesting. The way the whole scaling mechanism works on Apple operating systems (iOS and OS X) is that on "Retina" displays, there is something that we call a "scaling factor" (a floating point value) that gets bumped up to 2.0. On non-retina displays, the value is 1.0. And of course you can guess what it means: It's the points-to-pixels conversion ratio. So one would assume that by adjusting the scaling settings through the system preferences, the scaling factor now changes to non-integral values like 1.5, 2.35, etc. That would make sense, right? A sort of an elegant solution. Yes, it would. But no, that's not how it works. The scaling factor remains at a consistent 2.0, and the system changes the reported resolution instead. So when you choose the "looks like 1280×800" resolution from the menu, it gives you a scale factor of 2.0 and reports to applications a resolution of 1280×800, while behind the scenes probably drawing double the amount of pixels in each direction into a 2560×1600 framebuffer! This one is even funkier when you scale the other way: When you choose the "looks like 1920×1200" option (the default being 1440×900), you'll get a total resolution of 3840×2400 (when applying the scale factor). Effectively, in that setting, I have downgraded my 2880×1800 resolution to 1920×1200, but I'm apparently using about 78% more memory to display it (3840×2400 is roughly 1.78 times as many pixels as 2880×1800). A unique approach indeed .. (Yes, I understand I haven't truly downgraded to 1920×1200, the drawings remain sharp due to the higher "virtual" resolution .. but it's hardly the most appropriate way to do this)
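To make the arithmetic of these scaled modes concrete, here is a small Python sketch. It assumes, as observed above, that the scale factor stays fixed at 2.0 and that the system renders into a buffer of twice the reported size in each direction; the names `backing_store` and `pixel_ratio` are hypothetical and are not part of any Apple API.

```python
NATIVE_PANEL = (2880, 1800)   # physical pixels of the 15" retina panel
BACKING_SCALE = 2             # assumed constant, per the observation above


def backing_store(looks_like):
    """Size of the buffer rendered for a given "looks like" resolution."""
    width, height = looks_like
    return (width * BACKING_SCALE, height * BACKING_SCALE)


def pixel_ratio(buffer, panel=NATIVE_PANEL):
    """How many times more pixels the buffer holds than the panel shows."""
    return (buffer[0] * buffer[1]) / (panel[0] * panel[1])


for mode in [(1280, 800), (1440, 900), (1920, 1200)]:
    buf = backing_store(mode)
    print(mode, "->", buf, "ratio to panel:", round(pixel_ratio(buf), 2))
```

Under these assumptions the default 1440×900 mode maps exactly onto the panel, while the 1920×1200 mode renders 16/9 (about 1.78) times as many pixels as the panel can physically show before being scaled down.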

To give this a little bit more background, a quick googling will tell you that Apple has been trying, for a long time, to painfully implement resolution independence in their operating systems (starting from OS X 10.4 in 2005, moving through 10.5 in 2007, with signs of giving up in 10.6 in 2009, and finally throwing in the towel in 10.7 in 2011). In past versions, they had tried to introduce dynamic adjusting of the scaling factor, and it was included as a hidden feature in past versions of OS X. Those who tried it consistently reported that it didn't work: it caused UIs to break, and generally many things broke when the scaling was adjusted. So finally, Apple dropped the dynamic adjusting of the scaling in OS X 10.7 (they gave up on it), and implemented the hacky solution outlined above, which indeed was first introduced in iOS. That's where they are now. Apple, the technology giant that has more money than the United States government (as I've been told), tried for (at least) six long years to implement resolution independence, but in the end gave up, unable to do so. So it seems that this resolution independence thing is a tough nut, no matter how much money you have.

Right now, in Mac OS X 10.8, this pixel doubling thing somehow works, and as an end-user I now have my high resolution screen and I even got my extra desktop space (but not all of it, by the way; the preferences tool only gives me up to 1920×1200, not the full 2880×1800). So while it may work a little bit in practice, in the controlled environment where it has been set up, from an engineering perspective the implementation is incredibly complex. And for those application developers who have touched on this, I think you know exactly what I mean: applying and un-applying the scaling factor in the correct places when constructing offscreen bitmaps, applying appropriate transformation matrices when doing custom rendering to account for the pixel doubling, etc. etc. (and while of course many skilled and helpful individuals would surely be ready and willing to provide appropriate Objective-C snippets to do all the above things and to make an app work, that does not change the fact that it's incredibly complex). On top of that, Apple has introduced numerous functions and methods to handle these things in the API, some of them marked as more recommended than others, and of course the end result shows in developer forums that are being filled with confused programmers (and don't get me started on the @2x icon resources). Surely, one would think this could be done better?

I had an added piece of fun, then, when I popped in some virtual machines on this MacBook. This one is at first purely from an end user perspective. I loaded in VMware Fusion and installed Windows 7 and Ubuntu 13.04. All went well and they installed in the double-pixelled mode where they looked like they were running as 1440×900 in full screen. So slightly pixelized compared to what I had gotten used to (looking like the "normal" laptop experience). But luckily, VMware has included an easily discoverable (at least I discovered it easily) "use full retina display" checkbox under the display settings. When checked, immediately the pixel doubling disappears and the guest OS appears in 1-to-1 pixel mapping, with everything appearing very small and lots and lots of that extra space available: An actual 2880×1800 desktop (which OS X does not allow me to configure). In the cases of both Windows and Ubuntu, I then reconfigured the text rendering from their respective configurations to simply render a little bigger, and within about a minute or so, I had my high resolution "retina" configurations with my extra desktop space on Windows and Ubuntu. And since at this point I still had not discovered the way to do this on OS X, I felt like the guest OSes were better at handling the Mac hardware.

Of course, having configured the same on the OS X side of things, all things ended up roughly equal. Then again, the logic of the setup on the guest OSes (Ubuntu and Windows) was just so much simpler: One pixel is one pixel, and the size of objects rendered on screen depends on the size in which they were rendered. Makes sense, right? The Apple experience is not like that though.

But this is of course overlooking the perspective of application development. On both my virtual machines, the applications are in no way "retina aware", which may result in applications that look somewhat off. True (but I was amazed at how well the Windows installation scaled everything, including icons, which were consistently rendered in a high resolution manner .. I really didn't expect that, and weirdly I did feel like Windows really did so much better in handling the retina screen). But while both Ubuntu and Windows did quite well in the scaling, there is definitely something needed on the API side of things to properly design the user experience from an application developer perspective. But surely the complicated OS X / iOS APIs are not the best way to go?

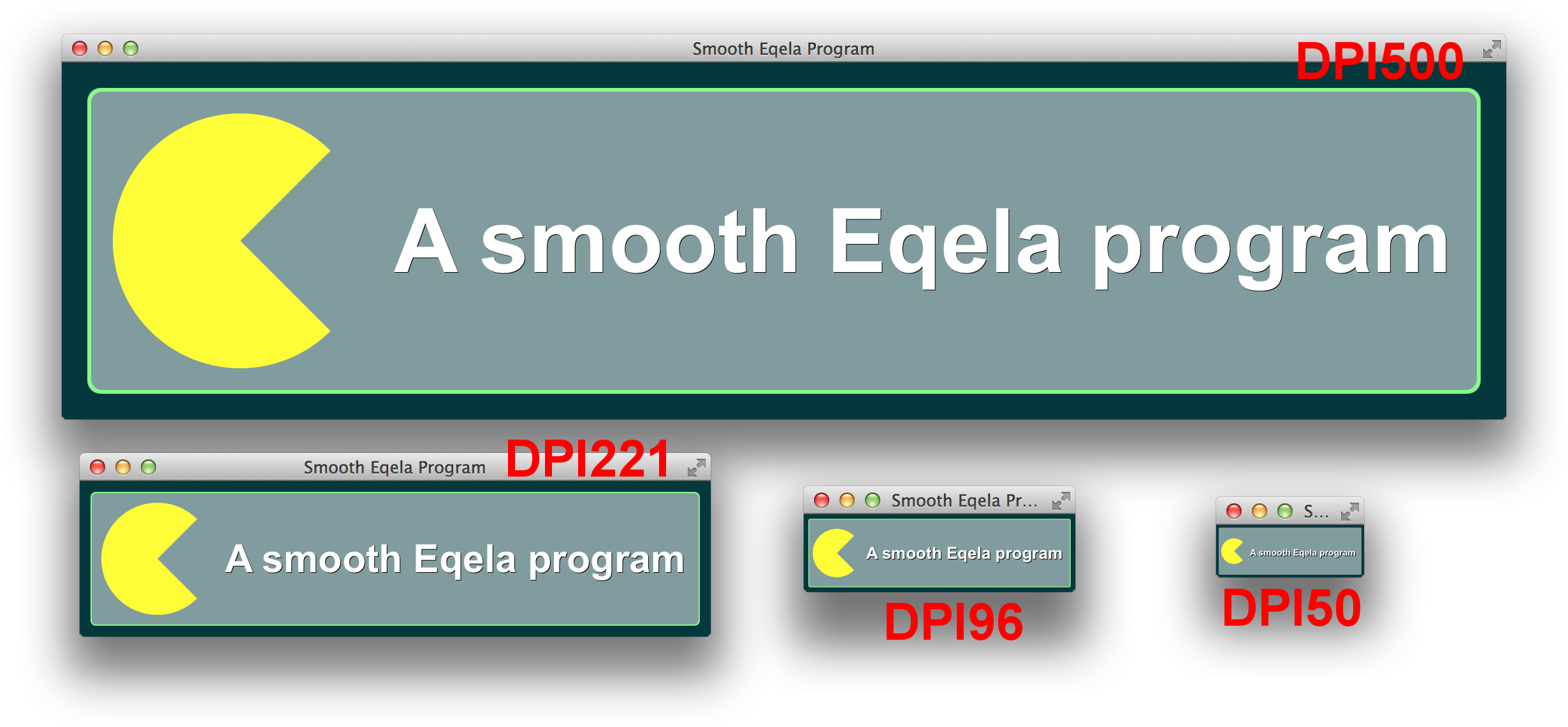

Now with Eqela, I can happily and proudly say that we have done what Apple has tried and failed to do for so many years, and has finally given up trying. Eqela applications are developed in a completely resolution independent manner, and they will draw and render using any number of pixels per inch, and will render smooth curves and lines regardless of the resolution or pixel density, and in the end, wherever the application runs, it can be displayed in a consistent physical size using whatever the number of pixels required to do so, and/or can be scaled up and down as required. And this works on any of the supported platforms, including Apple OS X, iOS, Android, Windows Phone, Blackberry, HTML5, Linux, Windows, etc. (yes, we do resolution scaling better on Apple platforms than Apple themselves do). While Apple wasn't able to make this work even on their own operating systems, despite their vast resources, we've decided to go overboard and make life better for everybody, regardless of what they're using :)

Above you will find a link to the screenshots demonstrating the scaling of a sample application developed with Eqela (just click the picture for a full copy, and depending on your browser, you may need to click it again to zoom all the way in to see the actual pixels in there). That application would display in the exact same physical size on a 500DPI screen (once we have those on the market), a 221DPI screen (which would be the MacBook Pro 15″ Retina), a 96DPI screen, and a 50DPI screen, respectively. You will hopefully notice that the scaling is seamless and the rendering remains smooth in whatever size / resolution / density the application is drawn in. All applications developed this way are thereby instantly "retina aware", regardless of the operating system or the display environment where they execute. And everything scales, by the way: Not just text (as is largely the case when configuring Ubuntu and Windows for higher resolution displays), but also images, custom shapes, drawings, spaces between user interface controls; whatever is being displayed. Furthermore, we can accommodate any kind of arbitrary DPI values / multiplication factors. Ever noticed how Apple tends to only multiply their DPI and/or their screen resolution by 2? Now you know why.
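The idea of a consistent physical size across densities comes down to converting a physical length to a pixel count for each screen. The following Python sketch shows that conversion for the four densities mentioned above; it is not Eqela's actual implementation, and `mm_to_px` is a hypothetical helper written only for this illustration.

```python
MM_PER_INCH = 25.4


def mm_to_px(mm: float, dpi: float) -> int:
    """Number of pixels needed to cover `mm` millimeters at `dpi` dots per inch.

    Hypothetical helper: rounds to the nearest whole pixel.
    """
    return round(mm / MM_PER_INCH * dpi)


# A 20 mm wide widget on the four screen densities discussed above.
for dpi in (500, 221, 96, 50):
    print(f"{dpi} DPI: 20 mm = {mm_to_px(20, dpi)} px")
```

The same 20 mm length needs anywhere from 39 pixels (50 DPI) to 394 pixels (500 DPI), so an application that specifies sizes in millimeters leaves the pixel count to be worked out per display.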

For completeness, below is also the complete code of the Eqela program used to produce the above screenshots. Notice how the sizes are defined in millimeters, not pixels.

    public class Main : LayerWidget
    {
        class ManWidget : Widget
        {
            public Collection render() {
                var manshape = CustomShape.create(
                    get_width() / 2, get_height() / 2);
                var aa1 = (double)(2.0 * MathConstant.M_PI * 45) / 360.0;
                var aa2 = (double)(2.0 * MathConstant.M_PI * -405) / 360.0;
                manshape.arc(get_width() / 2, get_height() / 2,
                    get_width() / 2, aa1, aa2);
                manshape.line(get_width() / 2, get_height() / 2);
                return(LinkedList.create().add(new FillColorOperation()
                    .set_color(Color.instance("#FFFF00"))
                    .set_shape(manshape)));
            }
        }

        public void initialize() {
            base.initialize();
            add(CanvasWidget.for_color(Theme.get_base_color()));
            var lw = LayerWidget.instance().set_margin(px("2mm"));
            lw.add(CanvasWidget.for_color(Color.instance("#FFFFFF80"))
                .set_rounded(true)
                .set_outline_color(Color.instance("#80FF80")));
            lw.add(HBoxWidget.instance().set_spacing(px("2mm"))
                .set_margin(px("2mm"))
                .add(new ManWidget().set_size_request(px("20mm"), px("20mm")))
                .add(LabelWidget.for_string("A smooth Eqela program")
                    .set_font(Theme.get_font("7mm bold"))
                    .set_color(Color.instance("white"))
                    .set_shadow_color(Color.instance("black")))
            );
            add(lw);
        }
    }

At least when looking at this from Cupertino, this would legitimately equate to Developing the Impossible. Let the future begin.